What is IndexNow?

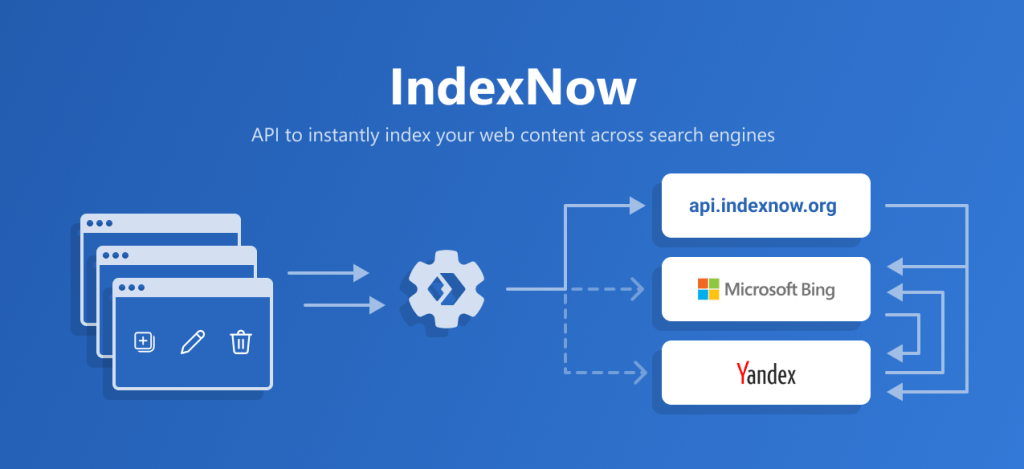

IndexNow is an open protocol, designed and maintained by Microsoft Bing in collaboration with Yandex. It enables website owners to instantly notify participating search engines (such as Bing, Yandex, and others) about changes they make to their website.

An IndexNow submission is a simple notification that tells search engines a URL and its content have been added, updated, or deleted. Search engines can then reflect the change in their search results quickly, which makes crawling more efficient overall.

Does Google support IndexNow?

Not officially. Google has announced that it would test the IndexNow protocol, but there have been no public updates since.

Google has discussed how to improve crawl efficiency and, as a result, make crawling more sustainable. In theory, the IndexNow API fits Google's aim of reducing its crawl rate.

How does IndexNow work?

You notify one participating search engine about a URL, and that engine shares the notification with all the other participating search engines. The promise of IndexNow is that a single submission reaches every participant. The engine you submit to discovers the URL immediately, and so do all the other participating search engines.

Search engines share URL discoveries with each other, and this is what makes the protocol revolutionary. It also improves the overall sustainability of the web, which is why IndexNow is referred to as the future of web crawling. Webmasters and website owners benefit too: they only need to submit URLs through this one protocol instead of updating every search engine in the marketplace separately.

How can you start using IndexNow API?

Before you start using IndexNow, you need to:

- Generate a key supported by IndexNow

- Host the key in a text file named with the value of the key at the root of your website and provide it as part of your query

- Start submitting URLs when your URLs are added, updated, or deleted.

Where can you get your IndexNow API key from?

Generating a key is extremely easy and can be done online for free. You can generate a key supported by the IndexNow protocol using Microsoft’s online key generation tool.
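If you would rather generate a key locally, the sketch below uses only Python's standard library. It assumes the key format described in the IndexNow documentation: 8 to 128 characters drawn from a-z, A-Z, 0-9 and the hyphen. The function names are hypothetical helpers for illustration, not part of any IndexNow library.

```python
import re
import secrets

# Assumed IndexNow key format: 8-128 characters of a-z, A-Z, 0-9 or "-"
KEY_PATTERN = re.compile(r"[a-zA-Z0-9-]{8,128}")


def generate_indexnow_key(num_bytes: int = 16) -> str:
    """Return a random hexadecimal key (two hex characters per byte)."""
    return secrets.token_hex(num_bytes)


def is_valid_indexnow_key(key: str) -> bool:
    """Check a key against the assumed format rules."""
    return KEY_PATTERN.fullmatch(key) is not None


if __name__ == "__main__":
    new_key = generate_indexnow_key()
    print(new_key, is_valid_indexnow_key(new_key))
```

With the default of 16 bytes, the result is a 32-character hex string, the same shape as the example key used later in this article.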

How to authenticate with IndexNow API?

To submit URLs, you must "prove" ownership of the host whose URLs you are submitting by hosting at least one text file on that host. Once you submit your URLs, search engines will crawl the key file to verify ownership and will keep using the key until you change it. Only you and the search engines should know the key and the location of your key file.

The easiest way to verify ownership is by hosting a text key file in the root directory of your host.

You must host a UTF-8 encoded text key file named {your-key}.txt, containing the key, in the root directory of your website.

For instance, if your key is 87ee692678e54f28a2f0db03d36f2636, you will need to host your UTF-8 key file at https://www.example.com/87ee692678e54f28a2f0db03d36f2636.txt, and this file must contain the key 87ee692678e54f28a2f0db03d36f2636.
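If you control your web server's files directly, the key file can be written with a few lines of Python. This is a minimal sketch that assumes your site is served from a local directory. The web_root argument is a placeholder for that directory, and both helpers are hypothetical, not part of any IndexNow tooling.

```python
from pathlib import Path


def write_key_file(key: str, web_root: str) -> Path:
    """Write {key}.txt into web_root, containing only the key, UTF-8 encoded."""
    path = Path(web_root) / f"{key}.txt"
    path.write_text(key, encoding="utf-8")
    return path


def key_file_url(host: str, key: str) -> str:
    """URL at which search engines will look for the key file (site root)."""
    return f"https://{host}/{key}.txt"
```

For the example above, write_key_file("87ee692678e54f28a2f0db03d36f2636", "/var/www/html") would create the file. This assumes /var/www/html is your document root. key_file_url("www.example.com", "87ee692678e54f28a2f0db03d36f2636") gives the URL you can open in a browser to confirm the file is publicly reachable.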

Are there any limitations to the number of URLs that can be submitted?

Yes, you can submit no more than 10,000 URLs in a single request to the IndexNow API.

You can issue the HTTP request using wget, curl, or another mechanism of your choosing. A successful request will return an HTTP 200 response code; if you receive a different response, you should verify your request and if everything looks fine, resubmit your request. The HTTP 200 response code only indicates that the search engine has received your set of URLs.

The recommended approach is to automate URL submission, so that each URL is submitted as soon as its content is added, updated, or deleted, while staying within the submission limits.
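When an automated job collects more than 10,000 changed URLs, it has to split them across several requests. The sketch below builds one request body per batch of at most 10,000 URLs. It uses the host / key / urlList JSON fields that the IndexNow bulk-submission format expects. The function name and the batch_size parameter are illustrative assumptions, not an official client.

```python
from typing import Iterator

MAX_URLS_PER_REQUEST = 10_000  # IndexNow limit per request


def build_payloads(host: str, key: str, urls: list[str],
                   batch_size: int = MAX_URLS_PER_REQUEST) -> Iterator[dict]:
    """Yield IndexNow JSON bodies, each containing at most batch_size URLs."""
    if not 1 <= batch_size <= MAX_URLS_PER_REQUEST:
        raise ValueError("batch_size must be between 1 and 10,000")
    # Step through the URL list in fixed-size slices
    for start in range(0, len(urls), batch_size):
        yield {
            "host": host,
            "key": key,
            "urlList": urls[start:start + batch_size],
        }
```

Each yielded dictionary is meant to be sent as the JSON body of a POST request to a participating engine's /indexnow endpoint. A list of 25,001 URLs, for example, becomes three requests of 10,000, 10,000 and 5,001 URLs.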

How can you submit URLs via IndexNow?

You can submit URLs individually or in bulk.

To submit a single URL

To submit a URL using an HTTP request, issue your request to the following URL, replacing <searchengine> with the host provided by the search engine:

https://<searchengine>/indexnow?url=url-changed&key=your-key

- url-changed is the URL on your website that has been added, updated, or deleted. The URL must be URL-escaped and encoded, and it must follow the RFC 3986 standard for URIs.

- your-key is your IndexNow key, the same value you host in your key file.

You can issue the HTTP request using your browser, wget, curl, or any other mechanism of your choosing.

A successful request will return an HTTP 200 response code. If you receive a different response, check the reason (see the response codes listed further down) and resubmit the request.

The HTTP 200 response code only indicates that the search engine has received your URL.
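Here is the single-URL request in Python, using only the standard library. This is a sketch that assumes an endpoint such as https://api.indexnow.org/indexnow. Bing's https://www.bing.com/indexnow takes the same parameters. urlencode takes care of escaping the changed URL, and the helper names are hypothetical.

```python
from urllib.error import HTTPError
from urllib.parse import urlencode
from urllib.request import urlopen


def build_ping_url(endpoint: str, changed_url: str, key: str) -> str:
    """Build <endpoint>?url=...&key=... with both values percent-encoded."""
    return f"{endpoint}?{urlencode({'url': changed_url, 'key': key})}"


def submit_single_url(endpoint: str, changed_url: str, key: str) -> int:
    """Send the GET request and return the HTTP status code."""
    try:
        with urlopen(build_ping_url(endpoint, changed_url, key), timeout=30) as resp:
            return resp.status
    except HTTPError as err:
        # urlopen raises on 4xx/5xx; surface the code instead of failing
        return err.code
```

For example, build_ping_url("https://www.bing.com/indexnow", "https://www.example.com/page?id=1", "abc12345") returns https://www.bing.com/indexnow?url=https%3A%2F%2Fwww.example.com%2Fpage%3Fid%3D1&key=abc12345. The ":", "/", "?" and "=" characters of the changed URL are all escaped in the result.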

What can you expect after submitting your URLs for indexation via IndexNow?

The IndexNow protocol notifies search engines to crawl the new or updated URLs, and you can monitor indexation and ranking performance with your usual tools. Apart from the response code you receive upon submission, you get no notification once a URL has been submitted.

Can IndexNow be used for URLs that were updated or is it only for new page submissions?

Absolutely, IndexNow can be used to notify search engines that a page has been updated. In fact, it can be used for notifications of content being added, updated, or deleted. The notification you send via the protocol allows search engines to quickly reflect the change in their search results.

What challenges does IndexNow alleviate for web users and website owners?

The IndexNow API can help resolve challenges related to:

- High rates of not discovered and not crawled URLs

- Discoverability of new URLs on poorly interlinked websites

- Change detection for URLs on large websites

What are the benefits of using the IndexNow API?

With IndexNow API, you can expect:

- Faster time from submission to indexation

- As a result, better organic performance

The IndexNow protocol is especially useful for websites whose pages update frequently, and for large, enterprise-level websites with poor internal linking and low URL discoverability rates.

When submitting URLs, what responses can you expect from the API and what do they mean?

The IndexNow documentation specifies six response codes you could get from the API once you've submitted your URLs:

- 200 – OK, Success

- 202 – Accepted, IndexNow Key Validation pending

- 400 – Bad Request, Invalid Format

- 403 – Forbidden, key-related (authentication) issue observed

- 422 – Unprocessable entity, URLs don’t belong to the host (domain) in the key, or key does not match protocol schema

- 429 – Too Many Requests – potential spam
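The table above maps directly onto a lookup you can use in your own submission scripts. In this sketch, the descriptions paraphrase the list above. Treating both 200 and 202 as "received" is an assumption: 202 means the URLs were accepted while key validation is still pending. The helper names are hypothetical.

```python
# Response codes listed in the IndexNow documentation (paraphrased)
INDEXNOW_RESPONSES = {
    200: "OK: URLs received",
    202: "Accepted: IndexNow key validation pending",
    400: "Bad request: invalid format",
    403: "Forbidden: key-related (authentication) issue",
    422: "Unprocessable entity: URLs don't belong to the host, or key doesn't match the schema",
    429: "Too many requests: potential spam",
}


def describe_response(status: int) -> str:
    """Human-readable meaning of an IndexNow status code."""
    return INDEXNOW_RESPONSES.get(status, f"Unexpected status {status}")


def is_accepted(status: int) -> bool:
    """Assumption: 200 and 202 both mean the submission was received."""
    return status in (200, 202)
```

A script can log describe_response(code) after each request, and stop or fix the request whenever is_accepted(code) is False, before resubmitting.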

How to bulk-submit URLs for indexation via IndexNow API?

Crawl Your Sitemap with Python and Sheets, Check for Indexation with Apps Script, and Bulk-Submit Non-Indexed URLs to IndexNow

(1) Crawl Your Sitemap Via Python & Store In Google Sheets

```python
# Install dependencies (Colab / Jupyter shell syntax)
!pip install advertools
!pip install --upgrade gspread

import advertools as adv
import pandas as pd
from IPython.display import display, HTML

# Widen the notebook container so wide DataFrames are easier to read
display(HTML("<style>.container { width:100% !important; }</style>"))

# URL of your XML sitemap (or sitemap index)
sitemap_url = "ENTER YOUR SITEMAP URL"

# Download and parse the sitemap, then round-trip it through CSV so every
# column (e.g. dates) becomes a plain, sheet-friendly value
sitemap = adv.sitemap_to_df(sitemap_url)
sitemap.to_csv("sitemap.csv", index=False)
sitemap_df = pd.read_csv("sitemap.csv")
display(sitemap_df.head())

# Authenticate to Google Sheets from Colab
from google.colab import auth
auth.authenticate_user()

import gspread
from google.auth import default

creds, _ = default()
gc = gspread.authorize(creds)

# Create the spreadsheet and open its first worksheet
sh = gc.create("ENTER THE TITLE OF YOUR WORKSHEET")
worksheet = sh.sheet1

# Replace NaN with empty values (Sheets cannot store NaN)
sitemap_df.fillna("", inplace=True)

# Convert the DataFrame to a list of rows, with the headers as the first row
sitemap_df_list = sitemap_df.to_numpy().tolist()
headers = sitemap_df.columns.to_list()
sitemap_df_towrite = [headers] + sitemap_df_list

# Write everything starting at cell A1
worksheet.update(values=sitemap_df_towrite, range_name="A1")

# Go to https://sheets.google.com to see your new spreadsheet.
```

(2) Check Whether URLs Have Been Indexed via an Apps Script Formula or with Python

To check whether your URLs have been indexed, add the custom function below via Google Apps Script. Then enter the formula =isPageIndexed(cellreference) into the cell next to each URL.

As an example, if your URL is in cell A2, the formula you enter in B2 to check whether it has been indexed would be:

=isPageIndexed(A2)

Then, just drag it down to check all URLs.

```javascript
/**
 * Custom Sheets function: runs a Google "site:" query for the given URL
 * and reports whether any results come back.
 */
function isPageIndexed(url) {
  // Encode the query so URLs with "?", "&" or "#" don't break the search URL
  var searchUrl = "https://www.google.com/search?q=" + encodeURIComponent("site:" + url);
  var options = {
    muteHttpExceptions: true,
    followRedirects: false
  };
  var response = UrlFetchApp.fetch(searchUrl, options);
  var html = response.getContentText();
  // Google's "no results" message means the URL is not in the index
  if (html.match(/Your search -.*- did not match any documents./)) {
    return "URL is Not Indexed";
  } else {
    return "URL is Indexed";
  }
}
```

One caveat is that this works for smaller sitemaps but not for bigger ones. If your sitemap has fewer than 10K URLs this will work, but for larger sitemaps it becomes cumbersome to run the process in Google Sheets via this formula.

In such cases, it is best to port this logic to Python to get the indexation statistics.

(3) Submit your non-indexed URLs via IndexNow API

Download your list of non-indexed URLs and save it in JSON format, as a JSON array of URL strings. Then use the Python code below to submit your URLs for indexing.

```python
import json

import requests

# Your IndexNow key (the same value you host at https://<your-host>/<key>.txt)
key = "ENTER YOUR API KEY"

# Load your non-indexed URLs: a JSON array of URL strings
with open("FILEPATH OF YOUR NOINDEXED LIST JSON FILE", encoding="utf-8") as f:
    not_indexed_list = json.load(f)

# Build the IndexNow request body; "host" is YOUR website's host,
# not the search engine's
data = {
    "host": "ENTER YOUR HOST, E.G. www.example.com",
    "key": key,
    "urlList": not_indexed_list,
}

# POST the body as JSON to the search engine's IndexNow endpoint
r = requests.post(
    "https://www.bing.com/indexnow",
    json=data,
    headers={"Content-Type": "application/json; charset=utf-8"},
)
print(r.status_code)
```

Get a List of Not Crawled and Not Indexed URLs via Oncrawl (or an Equivalent Tool) and Bulk-Submit Non-Indexed URLs to the IndexNow API

If you want to save yourself a bit of hassle, you can simply download a list of the not-discovered and not-indexed URLs via Oncrawl. An equivalent tool also works, e.g. Botify or any other tool that reports on log files and bot behavior.

Using Oncrawl, as an example, this is easy to find via the Ranking > Ranking & Googlebot Report, where you can directly download a list of such URLs.

When you reach the report, titled Pages crawled by Google: ratio of ranking pages, you can click on all of the dark grey patterns to view the list of URLs for each of your designated page groups.

Then, remove all other columns besides the URL, and export the data in JSON format.

If your file is too big (remember, IndexNow only allows you to submit up to 10,000 URLs per request), you can split it using this Python code.

```python
import json
import math
import os

# Path to the JSON Lines file exported from Oncrawl (one JSON record per line)
input_path = os.path.join(
    "ENTER YOUR FOLDER PATH HERE",
    "ENTER THE NAME OF THE FILE YOU DOWNLOADED FROM ONCRAWL.jsonl",
)
with open(input_path, "r", encoding="utf-8") as f1:
    ll = [json.loads(line) for line in f1 if line.strip()]

# Total number of records in the file
print(len(ll))

# Number of URLs per output JSON file; define your own size according to
# your needs, but IndexNow accepts at most 10,000 URLs per request
size_of_the_split = 10000

# Number of splits, rounded up so no empty trailing file is written
total = math.ceil(len(ll) / size_of_the_split)
print(total)

for i in range(total):
    chunk = ll[i * size_of_the_split:(i + 1) * size_of_the_split]
    out_path = "ENTER THE FOLDER PATH WHERE YOU WANT THE FILES TO BE DOWNLOADED" + str(i + 1) + ".json"
    with open(out_path, "w", encoding="utf-8") as f2:
        json.dump(chunk, f2, ensure_ascii=False, indent=2)
```

Then you can use the same function as above to submit the URLs to IndexNow, adding a custom looping function that submits the mini-JSON files only after getting a successful response from the API after the submission of each previous file.
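One way to write that loop is sketched below, under a few assumptions. Each mini-JSON file holds a plain JSON array of URL strings (if your export stores objects, map them to URL strings first). The endpoint is https://api.indexnow.org/indexnow. A response of 200 or 202 counts as success. The function names are hypothetical, not taken from the GitHub script mentioned below. The send parameter lets you swap in a different HTTP client, or a fake one for testing.

```python
import json
from pathlib import Path
from typing import Callable
from urllib.error import HTTPError
from urllib.request import Request, urlopen


def post_to_indexnow(payload: dict,
                     endpoint: str = "https://api.indexnow.org/indexnow") -> int:
    """POST one IndexNow JSON body and return the HTTP status code."""
    request = Request(
        endpoint,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json; charset=utf-8"},
        method="POST",
    )
    try:
        with urlopen(request, timeout=60) as resp:
            return resp.status
    except HTTPError as err:
        return err.code


def submit_files_in_sequence(files: list[Path], host: str, key: str,
                             send: Callable[[dict], int] = post_to_indexnow) -> list[int]:
    """Submit each mini-JSON file in order; stop at the first unsuccessful response."""
    statuses = []
    for path in files:
        urls = json.loads(path.read_text(encoding="utf-8"))
        status = send({"host": host, "key": key, "urlList": urls})
        statuses.append(status)
        if status not in (200, 202):
            break  # fix the problem before submitting the remaining files
    return statuses
```

For example, call submit_files_in_sequence(sorted(Path("your-folder").glob("*.json")), "www.example.com", key). The returned list shows the status for every file sent, and the last entry explains where the run stopped.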

I've saved you a bit of hassle and created a script that runs based on input, using the process described above. Check it out on my GitHub.

Track Indexation progress with Google Data Studio

So, you’ve submitted the URLs to the API. Now what?

Well, it’s time for the most important step – progress tracking.

This is where the Google Sheet with the submitted URLs comes in handy. If you used the second method and extracted URLs from Oncrawl, you can export them as CSV and upload them to your Drive as a Google Sheet. You will blend this list with a Google Search Console data source in Google Data Studio to check:

- how fast your URLs have been indexed after the submission date

- how many impressions and clicks they are getting

- how many URLs have been indexed after you've submitted them via IndexNow API

- how many queries the URLs you've submitted rank for

Data Studio is perfect for this type of work. It lets you slice the data by top-level and sub-level directories via custom dimensions, and you can also monitor time-series performance after indexation.

Your Data Studio Dashboard would need just two data sources added:

- A Google Sheet with one column: the list of all URLs submitted to the IndexNow API

- Google Search Console Data Studio Connector (URL)

To create this view, use a blended data source that joins the list of URLs with Google Search Console. Use a left outer join, configured on URL <> Landing Page as the join field. This lets you monitor rankings and indexation only for the URLs submitted as part of the test.

For the Indexed? Y/N field, use the following formula:

CASE WHEN Impressions > 0 THEN 1 ELSE 0 END

And that's it!

You are all set to track indexation on your submitted URLs.
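To sanity-check the same numbers outside Data Studio, you can approximate the blend in pandas. This is a sketch with two assumptions. You have the submitted URLs in a table with a URL column. You also have a Search Console page-level export with Landing Page, Impressions and Clicks columns, already aggregated to one row per page. These column names are assumptions and may differ in your exports.

```python
import pandas as pd


def blend_submitted_with_gsc(submitted: pd.DataFrame, gsc: pd.DataFrame) -> pd.DataFrame:
    """Left-join submitted URLs with Search Console metrics and flag indexed URLs."""
    # Left outer join on URL <> Landing Page, as in the Data Studio blend
    blended = submitted.merge(gsc, how="left", left_on="URL", right_on="Landing Page")
    # URLs with no Search Console row get zero impressions and clicks
    blended["Impressions"] = blended["Impressions"].fillna(0)
    blended["Clicks"] = blended["Clicks"].fillna(0)
    # Same rule as CASE WHEN Impressions > 0 THEN 1 ELSE 0 END
    blended["Indexed"] = (blended["Impressions"] > 0).astype(int)
    return blended
```

On the blended result, blended["Indexed"].sum() counts the submitted URLs that are getting impressions, and blended["Indexed"].mean() gives their share of all submitted URLs.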

How else can you submit your URLs (IndexNow plug-ins and partners)?

IndexNow Plugin for WordPress

When you add the IndexNow plugin to WordPress, you enable automated submission of URLs from your WordPress site to multiple search engines, without needing to register and verify your site with each of them.

Once installed, the plugin will automatically generate and host the API key on your site, speeding up the process of setting up and submitting URLs significantly. This plug-in is a must-have for all webmasters to add to their arsenal, significantly speeding up SEO results in relation to content discoverability.

Cloudflare Support for IndexNow via Crawler Hints

Crawler Hints is a service developed by Cloudflare, part of which supports and integrates with IndexNow URL submissions. It is free to use for all Cloudflare customers and promises to revolutionize web efficiency. To see how Crawler Hints can improve how the world's biggest search engines index your website, opt into the service:

- Sign in to your Cloudflare Account.

- In the dashboard, navigate to the Cache tab.

- Click on the Configuration section.

- Locate the Crawler Hints sign-up card and enable it.

Once you've enabled it, Cloudflare will begin sending hints to search engines about when they should crawl particular parts of your website. Crawler Hints holds tremendous promise for improving the efficiency of the Internet.

Thanks so much for reading and let me know how you get on with this tutorial in the comments.