Topic modeling is one of the natural language processing techniques I need most often in my role. More recently, I’ve seen it popularised as entity extraction; however, its aim remains the same: pattern recognition in a corpus of text.

In this article, I will do an in-depth review of the techniques that can be used for performing topic modeling on short-form text. Short-form text is typically user-generated and is characterised by a lack of structure, the presence of noise, and a lack of context, all of which make it difficult for machine learning models to handle.

This article is part two of my Sentiment Analysis deep dive and is compiled as a result of a systematic literature review on topic modeling and sentiment analysis I did when studying for my Master’s degree at the University of Strathclyde.

What is Topic Modelling in Machine learning – An Overview

Topic modeling is a text processing technique that aims to overcome information overload by seeking out and surfacing patterns in textual data, which are identified as topics. It enables an improved user experience, allowing analysts to navigate quickly through a corpus of text or a collection, guided by the identified topics.

Topic modeling is typically performed via unsupervised learning, with the output of running the models being a summary overview of the discovered themes.

Detecting topics can be done in both online and offline modes. When done online, it aims to discover dynamic topics over time as they appear. When done offline, it is retrospective, considering documents in the corpus as a batch, detecting topics one at a time.

There are four main approaches to topic detection and modeling:

- keyword-based approach

- probabilistic topic modelling

- aging theory

- graph-based approaches.

Approaches can also be categorized by techniques used for topic identification, which creates three groups:

- clustering

- classification

- probabilistic techniques

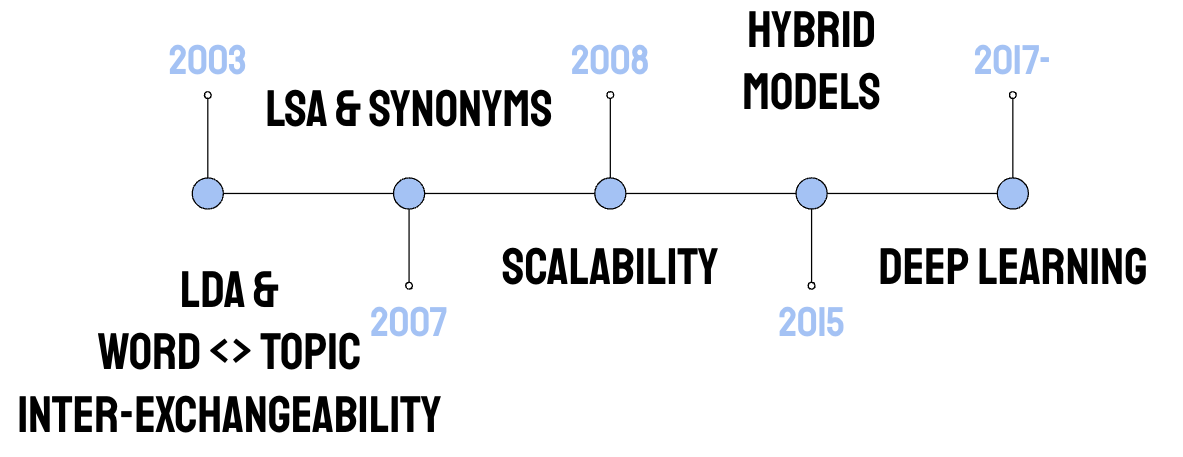

How did topic modeling come about?

According to the author of the first topic modeling algorithm, there was a problem. As collective knowledge increases, the discovery of information gets more and more difficult. The solution, in his opinion, was computational tools that can be implemented to help organize, search and understand the vast amount of information.

Another problem he saw was that existing systems of queries and links are good, but they can be very limiting. The solution, in his opinion, was systems that organize documents based on themes, or in other words, topics and subtopics.

The final problem he saw was that there was no ability to zoom in and out of topics of interest and to highlight patterns within them. The solution, he thought, was topic models that operate under the assumption that a document contains multiple subtopics.

And this is how the first topic modeling algorithm, LDA, was born.

1. Latent Dirichlet Allocation — LDA

LDA (Latent Dirichlet Allocation) is a Bayesian hierarchical probabilistic generative model for collections of discrete data. It operates based on an exchangeability assumption for words and topics in the document. It models documents as discrete distributions over topics, and topics, in turn, as discrete distributions over the terms in the documents.
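
To make the generative view more concrete, here is a minimal sketch in plain Python of how LDA assumes a single document comes into being: a topic mixture is drawn for the document, and each word is then produced by first picking a topic and then picking a term from that topic. The vocabulary, the two topics, and the `alpha` value are made-up illustrations, not values from any of the studies reviewed here.

```python
import random

def sample_dirichlet(alpha, rng):
    """Draw one sample from a Dirichlet distribution via normalised Gamma draws."""
    draws = [rng.gammavariate(a, 1.0) for a in alpha]
    total = sum(draws)
    return [d / total for d in draws]

def sample_categorical(probs, rng):
    """Draw an index with probability proportional to probs."""
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]

def generate_document(topics, vocab, alpha, n_words, rng):
    """Generate one document following LDA's assumed generative process."""
    theta = sample_dirichlet(alpha, rng)  # document-level distribution over topics
    words = []
    for _ in range(n_words):
        z = sample_categorical(theta, rng)      # pick a topic for this word
        w = sample_categorical(topics[z], rng)  # pick a term from that topic
        words.append(vocab[w])
    return theta, words

# Hypothetical toy setup: two topics over a six-word vocabulary.
vocab = ["price", "refund", "delivery", "battery", "screen", "camera"]
topics = [
    [0.4, 0.3, 0.3, 0.0, 0.0, 0.0],  # a "customer service" style topic
    [0.0, 0.0, 0.0, 0.4, 0.3, 0.3],  # a "product features" style topic
]
rng = random.Random(0)
theta, doc = generate_document(topics, vocab, alpha=[0.5, 0.5], n_words=8, rng=rng)
print("topic mixture:", theta)
print("document:", doc)
```

Fitting LDA means running this story in reverse: given only the documents, infer the topic mixtures and the topics that most plausibly generated them.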

The original LDA method uses a variational expectation maximization (VEM) algorithm to infer topics for LDA. Later on, stochastic sampling inference based on Gibbs sampling was introduced. This improved the performance in experiments and has since been used more frequently as part of models.
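
As an illustration of the Gibbs sampling route, below is a minimal sketch of a collapsed Gibbs sampler for LDA in plain Python. It is a teaching example under simplifying assumptions (symmetric `alpha` and `beta` priors, a fixed number of iterations, no convergence check), and the toy documents are invented. It is not the implementation used in any of the cited work, and for real projects a library such as Gensim is the more sensible choice.

```python
import random

def lda_gibbs(docs, n_topics, n_iter=200, alpha=0.1, beta=0.01, seed=0):
    """Collapsed Gibbs sampling for LDA on a list of tokenised documents."""
    rng = random.Random(seed)
    vocab = sorted({w for doc in docs for w in doc})
    word_id = {w: i for i, w in enumerate(vocab)}
    V = len(vocab)

    # Count tables: document-topic, topic-word, and per-topic totals.
    doc_topic = [[0] * n_topics for _ in docs]
    topic_word = [[0] * V for _ in range(n_topics)]
    topic_total = [0] * n_topics

    # Initialise by assigning every token to a random topic.
    assignments = []
    for d, doc in enumerate(docs):
        z_doc = []
        for w in doc:
            z = rng.randrange(n_topics)
            z_doc.append(z)
            doc_topic[d][z] += 1
            topic_word[z][word_id[w]] += 1
            topic_total[z] += 1
        assignments.append(z_doc)

    for _ in range(n_iter):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                v, z = word_id[w], assignments[d][i]
                # Remove this token's current assignment from the counts.
                doc_topic[d][z] -= 1
                topic_word[z][v] -= 1
                topic_total[z] -= 1
                # Unnormalised probability of each topic given all other assignments.
                weights = [
                    (doc_topic[d][k] + alpha)
                    * (topic_word[k][v] + beta) / (topic_total[k] + V * beta)
                    for k in range(n_topics)
                ]
                z = rng.choices(range(n_topics), weights=weights, k=1)[0]
                # Record the newly sampled assignment.
                assignments[d][i] = z
                doc_topic[d][z] += 1
                topic_word[z][v] += 1
                topic_total[z] += 1

    # Smoothed estimates of the document-topic and topic-word distributions.
    theta = [
        [(c + alpha) / (len(doc) + n_topics * alpha) for c in row]
        for row, doc in zip(doc_topic, docs)
    ]
    phi = [
        [(c + beta) / (topic_total[k] + V * beta) for c in topic_word[k]]
        for k in range(n_topics)
    ]
    return vocab, theta, phi

# Invented toy corpus of short, already tokenised texts.
docs = [
    ["refund", "delivery", "late", "refund"],
    ["delivery", "late", "support", "refund"],
    ["battery", "screen", "camera", "battery"],
    ["camera", "screen", "battery", "zoom"],
]
vocab, theta, phi = lda_gibbs(docs, n_topics=2)
for k, row in enumerate(phi):
    top = sorted(range(len(vocab)), key=lambda v: row[v], reverse=True)[:3]
    print(f"topic {k}:", [vocab[v] for v in top])
```

Here `theta` holds one topic distribution per document and `phi` one term distribution per topic, which are the two outputs an analyst would typically inspect and label.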

Blei and colleagues, who first introduced LDA, demonstrate its superiority over the probabilistic LSI model. LSI (Latent Semantic Indexing) uses linear algebra and bag-of-words representations for extracting words with similar meanings.

Now, the idea behind LDA is that different words can stand as descriptors for a particular topic, and the algorithm calculates the probability of each word in a document belonging to one of the core topics that it identifies.

This allows someone like us to come in, look at the words that describe a particular subtopic in a document, and give that subtopic a name: what do these terms mean, what do they represent, and what should the topic be called? We can then see how often each of these subtopics is represented in a given document. In a nutshell, topic modeling is pattern recognition in large text-based corpora.

Benefits of LDA — What is LDA good for?

1. Strategic Business Optimization

Of all the techniques reviewed, LDA was the one most commonly included in models, and it is considered valuable for strategic business optimization.

Here, I’d like to specifically mention the huge impact that LDA can have for SEOs. It can help you understand large website structures, topics, and subtopics quickly, which helps establish a basic structure for internal linking efforts and internal link optimization. This, in turn, can help increase a website’s visibility in search engines and its organic performance.

2. Improve competitive advantage via a better understanding of user-generated text.

A 2018 study demonstrates the value of LDA as a method of improving a company’s competitive advantage by extracting information from user online reviews and subsequently classifying topics according to sentiment.

3. Improve a company’s understanding of its users.

LDA-based topic modeling has also been used to characterize the personality traits of users, based on their online text publications.

In my own study, I used LDA topic modeling to categorize users in stages of their customer journey, based on short, user-generated text they posted on social media in relation to a product or company.

4. Understand customer complaints and improve efficiency in customer service.

In a 2019 study, LDA topic modeling was used to analyze consumer complaints in a consumer financial protection bureau. Predetermined labels were used for classification, which improves the efficiency of the complaint handling department through task automation.

Limitations of LDA — What is LDA criticized for?

1. Inability to scale.

LDA has been criticized for not being able to scale due to the linearity of the technique it is based on.

Other variants, such as pLSI, the probabilistic variant of LSI, solve this challenge by using a statistical foundation and working with a generative data model.

2. Assuming document exchangeability

Although efficient and frequently used, LDA is criticized for its assumption of document exchangeability. This can be restrictive in contexts where topics evolve over time.

3. Commonly neglecting co-occurrence relations.

LDA-based models are criticized for commonly neglecting co-occurrence relations across the documents analyzed. This results in the detection of incomplete information and an inability to discover latent co-occurrence relations via the context or other bridge terms.

Why is this important? This can prevent topics that are important but rare from being detected.

This criticism is also shared in the analysis of a later study, where the authors propose a model specifically tailored for online social networks topic modeling. They demonstrate that even shallow machine learning clustering techniques applied to neural embedding feature representations deliver more efficient performance as compared to LDA.

4. Unsuitable for short, user-generated text.

Hajjem and Latiri (2017) criticize the LDA approach as unsuitable for short-form text. They propose a hybrid model, which utilizes mechanisms typical for the field of information retrieval.

LDA-hybrid approaches have also been proposed to address these limitations. However, even they perform sub-optimally on short-form text, which calls into question the efficiency of LDA on noisy, unstructured social media data.

2. Hybrid LDA Methodologies

To address some of the highlighted limitations of LDA, models that learn vector representation of words were introduced.

By learning vector representations of words and hidden topics, these models are reported to achieve more effective classification performance on short-form text.

Yu and Qiu propose a hybrid model, where the user-LDA topic model is extended with the Dirichlet multinomial mixture and a word vector tool, resulting in optimal performance, when compared to other hybrid models or the LDA model alone on microblog (i.e. short) textual data.

Another conceptually similar approach can be applied to Twitter data. This is the hierarchical latent Dirichlet allocation (hLDA), which aims to automatically mine the hierarchical dimension of tweets’ topics by using word2vec (a word vector representation technique). By doing so, it extracts semantic relationships between words in the data to obtain a more effective dimension.

Other approaches, such as non-negative matrix factorization (NMF), have also been recognized to perform better than LDA on short text under similar configurations.
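
For readers unfamiliar with NMF, the sketch below shows the core idea in plain Python: a non-negative document-term matrix `X` is approximated by the product of a document-topic matrix `W` and a topic-term matrix `H`. It uses the classic multiplicative update rules for squared error, and the tiny count matrix is invented for illustration. This is an assumption-laden teaching example, not the configuration used in the studies mentioned above.

```python
import random

def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]

def transpose(a):
    """Transpose a matrix stored as a list of rows."""
    return [list(col) for col in zip(*a)]

def nmf(X, n_topics, n_iter=200, seed=0, eps=1e-9):
    """Approximate non-negative X (docs x terms) as W @ H via multiplicative updates."""
    rng = random.Random(seed)
    n_docs, n_terms = len(X), len(X[0])
    W = [[rng.random() + eps for _ in range(n_topics)] for _ in range(n_docs)]
    H = [[rng.random() + eps for _ in range(n_terms)] for _ in range(n_topics)]
    for _ in range(n_iter):
        # H <- H * (W^T X) / (W^T W H), element-wise
        Wt = transpose(W)
        num, den = matmul(Wt, X), matmul(matmul(Wt, W), H)
        H = [[h * n / (d + eps) for h, n, d in zip(hr, nr, dr)]
             for hr, nr, dr in zip(H, num, den)]
        # W <- W * (X H^T) / (W H H^T), element-wise
        Ht = transpose(H)
        num, den = matmul(X, Ht), matmul(W, matmul(H, Ht))
        W = [[w * n / (d + eps) for w, n, d in zip(wr, nr, dr)]
             for wr, nr, dr in zip(W, num, den)]
    return W, H

# Invented term counts: four short documents over four terms.
terms = ["refund", "delivery", "battery", "camera"]
X = [
    [2, 1, 0, 0],
    [1, 2, 0, 0],
    [0, 0, 1, 2],
    [0, 0, 2, 1],
]
W, H = nmf(X, n_topics=2)
for k, row in enumerate(H):
    ranked = sorted(zip(terms, row), key=lambda t: t[1], reverse=True)
    print(f"topic {k}:", [t for t, _ in ranked[:2]])
```

Unlike LDA, the rows of `W` and `H` are not probability distributions, only non-negative weights, so they are usually normalised before being compared across documents.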

3. LDA alternatives

Apart from LDA, there are numerous other developments in the field of topic discovery. Considering the lack of academic attention they have received, they appear to have critical limitations that remain unaddressed.

In a 2017 study, a hierarchical approach for topic detection is proposed where words are treated as binary variables and allowed to appear in only one branch of the hierarchy. Although efficient when compared to LDA, this approach is unsuitable for application on UGC, or social media short text, because of the language ambiguity that characterizes such data.

A Gaussian Mixture Model can also be used for topic modeling of news articles. This model aims to represent text as a probability distribution as a means of discovering topics. Although better than LDA, it will likely also perform less coherently in topic discovery on UGC short texts. The reason is the lack of structure and the data sparsity of short-form texts.

Another model based on Formal Concept Analysis (FCA) was proposed for topic modeling of Twitter data. This approach facilitates the detection of new topics based on information coming from previous topics. Yet it fails to generalize well, meaning that it is unreliable and sensitive to topics that it has not been trained on.

Other models, such as the TG-MDP (topic-graph-Markov-decision-process), consider semantic characteristics of textual data and automatically select an optimal topic set with low time complexity. This approach is suited only to offline topic detection (which, as mentioned earlier, is less common). Even so, it offers promising results when benchmarked against LDA-based algorithms (GAC, LDA-GS, KG).

You might be wondering what these models can be used for:

- detecting emerging topics in microblogging communities like Twitter, Reddit, and other sites

- observing topic evolution over a period of time

How to Quickly Get Started with Topic Modelling using LDA

You can use one of the tutorials below to kickstart your topic modeling journey, using Python for the scripting:

- Topic Modeling in Python: Latent Dirichlet Allocation (LDA) by Shashank Kapadia

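- Linear Discriminant Analysis With Python by Jason Brownlee (note that this covers linear discriminant analysis, a classification technique that shares the LDA acronym, rather than Latent Dirichlet Allocation)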

- Latent Semantic Analysis using Python by Avinash Navlani

- Topic Modelling in Python with NLTK and Gensim by Susan Li

Or you can just skip the code and jump straight in.

I recorded a video with a step-by-step tutorial on how to do topic modeling using a no-code, publicly available, LDA-based web application that was originally developed by Cornell.

In a nutshell, this video will show you how to crawl and export the content on your website, how to upload your files to the web app and fine-tune the model’s performance, and how to download the output files, explore them, and build them into the deliverable of your internal linking audit.

In the end, you’re going to have a couple of files. One shows the topic-to-topic similarity: how often the different topics identified on your website co-occur, and which of them don’t co-occur on the site at all. The other shows which topic each page on your site belongs to.
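
If you would rather reproduce these two outputs yourself, the sketch below shows one way to derive them from a page-by-topic weight matrix. The page URLs and weights are invented, and the matrix layout is an assumption for illustration; the web app’s actual export format may differ.

```python
import math

def dominant_topic(doc_topics):
    """Return the index of the highest-weighted topic for each page."""
    return [max(range(len(row)), key=lambda k: row[k]) for row in doc_topics]

def topic_similarity(doc_topics):
    """Cosine similarity between topics, based on how they co-occur across pages."""
    columns = list(zip(*doc_topics))  # one weight vector per topic

    def cosine(a, b):
        dot = sum(x * y for x, y in zip(a, b))
        norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
        return dot / norm if norm else 0.0

    return [[cosine(a, b) for b in columns] for a in columns]

# Hypothetical pages and their topic weights (each row sums to 1).
pages = ["/pricing", "/blog/seo-tips", "/blog/link-building"]
doc_topics = [
    [0.8, 0.1, 0.1],
    [0.1, 0.6, 0.3],
    [0.1, 0.4, 0.5],
]

for page, k in zip(pages, dominant_topic(doc_topics)):
    print(f"{page} -> topic {k}")

similarity = topic_similarity(doc_topics)
for row in similarity:
    print([round(v, 2) for v in row])
```

Pages that share a dominant topic are natural candidates for linking to each other, while topic pairs with a high similarity score hint at sections of the site that could be connected more deliberately.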

Why should you do this?

- You will get a baseline overview of the website (or any other kind of text-based corpus of data), regardless of its size, in less than 30 minutes.

- You will save yourself a ton of time

- You will test something new, risk-free, and if you are a newbie in the machine learning world – code-free, too!

To recap, although there are many approaches to topic modeling, LDA has evolved into the most commonly used one.

Considering its limitations, many hybrid approaches have been subsequently developed to improve topic accuracy and relevancy. These methodologies often challenge LDA’s probabilistic hierarchical structure.

Non-LDA approaches have also been developed; however, they are not well suited to short-form text analytics.

Up next, check out my analysis of the best topic modeling algorithms to use on short-form text and the respective limitations of doing so.