Edit: Later on in this article I present a dashboard and promise to update you once a new version of it arrives. Well, in September 2021, I updated the dashboard. You can check it out in my article, Accelerating Page Experience And Core Web Vitals Reporting With Data Studio.

Core Web Vitals page experience metrics were introduced as a ranking factor this June (Google announced that it was delaying the algorithm change until mid-June, after initially suggesting it would be released in May).

There are two methods of auditing and various tools, each with its own limitations and strengths. This makes traditional reporting complex, holistic, and static.

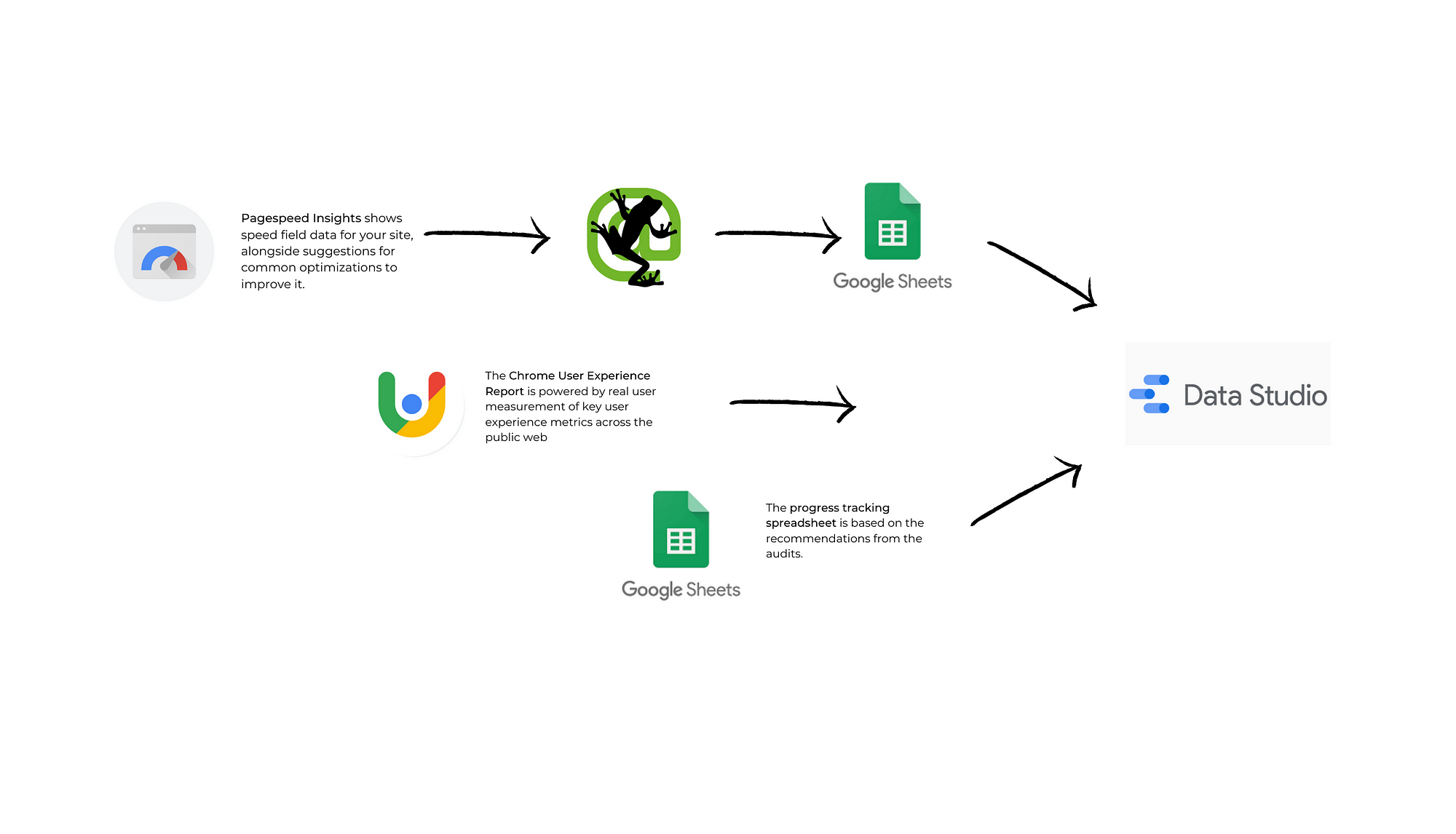

I built a Data Studio dashboard, using the CrUX Data Studio Connector (Chrome User Experience) and the PageSpeed Insights API, to enable tangible and actionable recommendations for all three metrics on a page-level, section-level, and site-level basis.

As a result, I enable my clients to not only track their performance but also their progress, empowering them with a progress tracking sheet, which has detailed execution advice, as well as prioritization, based on the executive assessment. This enables mapping the impact of the changes made to the site to the monthly performance reports.

The entire framework is available for site owners and SEOs to use following just five simple steps.

Background

Core Web Vitals were originally scheduled to become a ranking factor in May this year, with the rollout later pushed back to mid-June. The change is meant to bring users’ perceived page experience into how pages are measured.

As SEOs, we’ve been hearing and preparing for this over the past year, since this announcement was first made.

In case this is your first time hearing about this, the Core Web Vitals are a set of metrics Google uses to assess site speed, responsiveness, and visual stability.

Initially, there are three CWV metrics that Google will use to allocate rankings:

- Largest Contentful Paint (LCP): a measure of the overall loading speed of a page.

- First Input Delay (FID): the page’s responsiveness to user interactions.

- Cumulative Layout Shift (CLS): the visual stability of a page as it loads.

To provide a good user experience, LCP should occur within 2.5 seconds of when the page first starts loading, FID should be less than 100 milliseconds, and CLS should be less than 0.1.
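To make these thresholds concrete, here is a minimal Python sketch that rates a page's metrics. The "good" limits are the ones quoted above. The "needs improvement" limits (4 seconds, 300 milliseconds, and 0.25) are Google's published values rather than something covered in this article, and the function names are my own, purely for illustration.

```python
# Upper bounds per metric: (good, needs improvement).
# The "good" bounds come from the article; the second value in each
# pair is an addition taken from Google's published thresholds.
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # seconds
    "FID": (100, 300),   # milliseconds
    "CLS": (0.1, 0.25),  # unitless score
}


def rate_metric(metric: str, value: float) -> str:
    """Return 'good', 'needs improvement' or 'poor' for one metric value."""
    good, needs_improvement = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= needs_improvement:
        return "needs improvement"
    return "poor"


def rate_page(lcp_seconds: float, fid_ms: float, cls: float) -> dict:
    """Rate all three Core Web Vitals for a single page."""
    return {
        "LCP": rate_metric("LCP", lcp_seconds),
        "FID": rate_metric("FID", fid_ms),
        "CLS": rate_metric("CLS", cls),
    }


if __name__ == "__main__":
    print(rate_page(lcp_seconds=2.1, fid_ms=180, cls=0.31))
```

A value that sits exactly on a limit is treated as passing here, which is an assumption on my part about how to handle the boundary.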

I don’t want to spend too much time on the definitions and the way these metrics are calculated. There are many other brilliant writers out there who have done this perfectly, such as Billie Geena, Phil Isherwood, and Tom Rankin, to name just a few.

What I do want to do is provide a dashboard that will make the auditing process of large websites easier and actionable, as well as an approach to automating the entire process.

Before we begin, though, I want to share some of the limitations of the approaches we currently have for auditing CWV, and how the idea for this dashboard was born.

Auditing Core Web Vitals: Methods, Tools, Limitations

There are two methods for auditing Core Web Vitals:

- Field data: otherwise known as real user monitoring (RUM)

- Lab data: performance data collected in a controlled environment with predefined device and network settings, also known as emulated data

The tools that can be used to measure CWV performance are:

- PageSpeed Insights — provides URL-level user experience metrics for popular URLs that are known by Google’s web crawlers.

- Chrome UX Report — powered by real user measurement of key user experience metrics across the public web

- Search Console — provides groupings of pages that require attention, based on similar issues

- Chrome DevTools and Lighthouse — provide detailed measurement and guidance on fixing Core Web Vitals issues.

- WebVitals Extension — gives a real-time view of metrics on the desktop.

This awesome infographic shows the strengths and weaknesses of lab and field data, a description of the tools you can use for auditing, as well as the user profile of the ideal consumers of each of the tools. I highly recommend checking it out before moving on.

The Challenge

Web.dev recommends using all of the above tools as part of the assessment process. However, integrating all of them for a site-wide assessment of a large site is near impossible.

When I first started researching the Core Web Vitals in November, I was working with clients in the B2B sector. I embedded a detailed ChromeUX report in each of their dashboards. I also included a competitor analysis (shout out to Neal Cole’s blog post for the inspiration), again by using the ChromeUX report.

However, we failed to see value in it beyond its use as a holistic diagnosis and benchmarking tool.

Why?

Firstly, it is aggregated from users who have opted in to syncing their browsing history, have not set up a Sync passphrase, and have usage statistic reporting enabled. This means it is sampled data, albeit based on the experiences of real users.

Secondly, it only provides a holistic view of the site’s performance, even though it is aggregated from page-level user data. This makes it hard to diagnose individual pages with issues unless another tool is used.

So, to optimize for page speed, clients want tangible and actionable recommendations, which the ChromeUX report alone cannot provide.

Better yet — they want an interactive auditing system, which enables page-level, section-level, and site-level reporting, the discovery of emerging patterns, and progress-tracking.

And that is exactly what I built. Here’s the approach.

Part 1: A Core Web Vitals Data Studio Dashboard

You can access the Core Web Vitals DataStudio dashboard template from here.

Each of the three Core Web Vitals has a dedicated page in the dashboard. Each page has five key elements:

- An overview section

- Tables, showing page performance with recommendations for savings

- Recommendations on tackling the different groups of issues

- Pie charts and scorecards, showing affected pages as a number, and as a part of all analyzed pages.

- A testing ground

There is also dashboard documentation, which goes into detail about the build and set-up of charts in the dashboard.

Overview

At the top of the page there is an overview of the state of the site, using the two integrated APIs:

- The line graph shows Chrome UX Report data, fed in from the CrUX API. The graph uses predefined metrics, with their names changed for easier navigation.

- The pie chart shows a filtered view of the CrUX LCP Category metric, extracted from the Screaming Frog site audit report, which uses the PageSpeed Insights API.

Because some data entries do not have a score, a filter is applied to exclude null values.

Tables

The tables on the page show a direct extraction from the Screaming Frog site audit CSV linked to the report, with the values colored using a heatmap pattern.

Recommendations

The recommendation sections show the different categories of issues:

- Image optimization

- Server optimization

- Render optimization

- Resource optimization

- Javascript optimization, etc.

Each category comes with a description of how the improvements can be achieved by acting on the savings opportunities shown in the tables.

Scorecards

The scorecards are built on the dimension that holds the savings recommendation. Each one shows a count of affected pages, with null values and values less than one filtered out.

For instance, for the scorecard titled “Offscreen Images”, the dimension used is Defer Offscreen Images Savings, set to show a count.
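If you want to sanity-check a scorecard outside Data Studio, the same counting logic can be sketched in a few lines of Python. This is only an illustration: the sample CSV is made up, and I am assuming the export header matches the dimension name used above, which may not be exactly how it appears in your Screaming Frog file.

```python
import csv
import io

# Column name as referred to in the article; the header in a real
# Screaming Frog export may differ (assumption).
SAVINGS_COLUMN = "Defer Offscreen Images Savings"


def count_affected_pages(rows, column=SAVINGS_COLUMN):
    """Count rows whose savings value is present and at least one."""
    affected = 0
    for row in rows:
        raw = (row.get(column) or "").strip()
        if not raw:
            continue  # null: the page was not assessed
        try:
            value = float(raw)
        except ValueError:
            continue  # not a number, so skip it
        if value >= 1:
            affected += 1
    return affected


# Made-up sample data standing in for the audit export.
SAMPLE_CSV = """Address,Defer Offscreen Images Savings
https://example.com/,450
https://example.com/blog/,0
https://example.com/about/,
https://example.com/contact/,120
"""

rows = list(csv.DictReader(io.StringIO(SAMPLE_CSV)))
print(count_affected_pages(rows))
```

In this sample, the page with a value of 0 and the page with no value are both left out of the count, mirroring the scorecard filter.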

Pie charts

The pie charts put each category of issue in context, showing the number of affected pages as a percentage of the total number of examined pages.

They show an unfiltered view: unassessed pages (null) and pages with no potential savings (a value of 0) are included alongside the affected pages to demonstrate the scale of the issues. The aim is to assist with the prioritization of tasks.
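The split behind a pie chart like this can be sketched as follows. It is a rough illustration, assuming each page's savings value arrives as a string that is either empty (not assessed), zero (no savings), or a positive number. The category labels are my own, not the ones used in the dashboard.

```python
from collections import Counter


def categorize(raw):
    """Place one savings value into a pie chart slice."""
    text = (raw or "").strip()
    if not text:
        return "not assessed"
    return "savings available" if float(text) > 0 else "no savings"


def category_shares(values):
    """Return each slice as a percentage of all examined pages."""
    counts = Counter(categorize(v) for v in values)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {label: round(100 * n / total, 1) for label, n in counts.items()}


if __name__ == "__main__":
    print(category_shares(["450", "0", "", "120"]))
```

Keeping the empty and zero values in the calculation is what makes the slices add up to the full set of examined pages.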

Testing Ground

The last part of the report includes a testing ground, where individual URLs can be tested for in-depth page-level assessments.

This idea was inspired by Jackie Jeffers’ blog post, titled: “Field Guide to Reporting on Core Web Vitals: Part 2”, where she shows the embedding method used.

You might be wondering — should I keep this page? Does it really add value?

Embedding PageSpeed Insights via the URL Embed Tool lets report users examine individual web pages and delve deeper into specific issues.

For instance, if we were to examine image optimization savings opportunities using the dashboard for a specific section and page, here are the steps to follow:

- Narrow the list of URLs to a section of the website (e.g. the blog).

- Sort by potential image savings.

- Copy the URL.

- Go to page 4 — Testing Ground.

- Paste the URL in the search box.

- Click “Analyze”.

- Scroll down to the opportunities.

- Expand “properly size image savings”.

- View the individual files that need to be resized for faster page loads.
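The first two steps above (narrowing to a section and sorting by potential image savings) are the ones that lend themselves to a quick script. Here is a minimal sketch working on rows from the audit export. The "Address" and "Properly Size Images Savings" column names and the sample rows are assumptions for illustration, so check them against your own file.

```python
def top_image_savings(rows, section_prefix, column, limit=5):
    """Return the pages in one site section with the largest savings."""
    in_section = [
        row for row in rows
        if row["Address"].startswith(section_prefix) and row.get(column)
    ]
    ranked = sorted(in_section, key=lambda row: float(row[column]), reverse=True)
    return ranked[:limit]


# Made-up rows standing in for the audit export.
COLUMN = "Properly Size Images Savings"  # hypothetical header
rows = [
    {"Address": "https://example.com/blog/a", COLUMN: "300"},
    {"Address": "https://example.com/blog/b", COLUMN: "900"},
    {"Address": "https://example.com/shop/c", COLUMN: "1200"},
    {"Address": "https://example.com/blog/d", COLUMN: ""},
]

for row in top_image_savings(rows, "https://example.com/blog/", COLUMN):
    print(row["Address"], row[COLUMN])
```

The first URL printed is the one to copy into the Testing Ground.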

Part 2: A Progress-Tracking Sheet

We’ve provided a progress-tracking spreadsheet based on the recommendations, because we want to empower our clients to feel a sense of ownership of the changes they need to make.

The most important thing about this sheet is undoubtedly the implementation date. This can be used later on in the report to map out the impact that certain changes have had on the site.

This makes it possible to build time graphs that combine the date dimension of the crawls with the date dimension of the implementations, making use of Screaming Frog’s new change detection feature.
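To illustrate why the implementation date matters, here is a small sketch that compares a metric before and after a change went live. The crawl dates and LCP values are invented, and comparing simple averages is just one rough way to read the impact, not the method the dashboard itself uses.

```python
from datetime import date
from statistics import mean


def impact_of_change(crawls, implemented_on):
    """Return the change in the average metric value after the
    implementation date, or None if either side has no crawls.

    `crawls` is a list of (crawl_date, metric_value) pairs.
    """
    before = [value for day, value in crawls if day < implemented_on]
    after = [value for day, value in crawls if day >= implemented_on]
    if not before or not after:
        return None
    return mean(after) - mean(before)


# Invented weekly crawls of one page's LCP, in seconds.
crawls = [
    (date(2021, 3, 1), 4.0),
    (date(2021, 3, 8), 4.2),
    (date(2021, 3, 22), 3.0),
    (date(2021, 3, 29), 2.8),
]

delta = impact_of_change(crawls, implemented_on=date(2021, 3, 15))
print(f"Average LCP change: {delta:+.1f}s")
```

A negative number means the metric went down after the change, which for LCP is the direction you want.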

Plug and Play – An Easy, 5-Step Process

- Filter the PageSpeed Insights API bot traffic in GA.

- Audit Core Web Vitals with Screaming Frog and export the audit data to a Drive spreadsheet.

- Automate the crawl by setting it up to reoccur.

- Copy the dashboard template.

- Establish the connections:

  - Connect the Chrome UX report for your selected domain.

  - Connect the Screaming Frog audit spreadsheet.

  - Connect the progress-tracking sheet.

Additional Notes

Filtering PageSpeed Insights API bot traffic in GA

Here is how to create a regex filter pattern in Google Analytics for the PageSpeed Insights API bot before using the API with Screaming Frog.

The PageSpeed Insights API renders web pages to get lab data about their performance. However, it has a limitation.

Its instances appear to be recorded in Google Analytics as direct traffic, even when the ‘Exclude all hits from known bots and spiders’ view setting is enabled.

According to Screaming Frog, Google is aware of the issue and is working to resolve it. In the meantime, to stop PageSpeed Insights from falsely bloating GA data, we can use a filter to exclude known Google IP addresses.

Copy and paste this filter pattern in the exclude section in the Google Analytics Settings:

^66\.249\.(6[4-9]|[7-8][0-9]|9[0-5])\.([0-9]|[1-9][0-9]|1([0-9][0-9])|2([0-4][0-9]|5[0-5]))$
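Before pasting the pattern into Google Analytics, it can be worth checking what it actually matches. The sketch below tests it in Python, with plain ASCII hyphens in the character ranges (copying from a web page can silently turn them into dashes, which breaks the ranges). The helper name is my own.

```python
import re

# The filter pattern from the article, typed with ASCII hyphens.
# It covers the IP range 66.249.64.0 to 66.249.95.255.
GOOGLE_IP_PATTERN = re.compile(
    r"^66\.249\.(6[4-9]|[7-8][0-9]|9[0-5])\."
    r"([0-9]|[1-9][0-9]|1([0-9][0-9])|2([0-4][0-9]|5[0-5]))$"
)


def is_filtered(ip_address: str) -> bool:
    """Return True if the filter pattern would exclude this IP address."""
    return GOOGLE_IP_PATTERN.match(ip_address) is not None


if __name__ == "__main__":
    for ip in ["66.249.64.0", "66.249.95.255", "66.249.63.1", "66.249.96.1"]:
        print(ip, is_filtered(ip))
```

The first two addresses sit at the edges of the range and should be excluded by the filter, while the last two fall just outside it.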

Null values

If you see a “No data available” screen, it means one of two things:

- Your property is new in Search Console.

- There is not enough data available in the CrUX report to provide meaningful information for the chosen device type (desktop or mobile).

If the web property is new, data might not have been gathered for it yet.

The CrUX database gathers information about URLs whether or not the URL is part of a Search Console property. But it can take a few days after a property is created to analyze and post any existing data from the CrUX database.

Read more at Core Web Vitals report — Search Console Help

Data freshness

Google has confirmed that the data in the ChromeUX report will only be updated once per month, on the second Tuesday of every month. Google has already indicated that it has no plans to change the schedule of these updates.

Screaming Frog crawls can be scheduled more frequently.

Pagespeed Insights API <> ChromeUX Report API data discrepancies

The Chrome UX Report API gives low-latency access to aggregated Real User Metrics (RUM) from the Chrome User Experience Report.

Read more at Overview | Chrome UX Report.

The PageSpeed Insights API returns performance data about the desktop version of the specified URL by default.

Read more at Measure Core Web Vitals with the PageSpeed Insights and CrUX Report APIs.

The CrUX API only reports field user experience data, unlike the existing PageSpeed Insights API, which also reports lab data from the Lighthouse performance audits. The CrUX API is streamlined and can quickly serve user experience data, making it ideally suited for real-time auditing applications.

Read more at Using the Chrome UX Report API.
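To show how the two APIs differ in practice, here is a minimal sketch that builds a request for each one without sending it. The endpoints are the public PageSpeed Insights v5 and Chrome UX Report v1 endpoints. The function names and the "YOUR_API_KEY" placeholder are mine, and I explicitly pass a mobile strategy because the PageSpeed Insights API reports on desktop by default.

```python
import json
from urllib.parse import urlencode

PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"
CRUX_ENDPOINT = "https://chromeuxreport.googleapis.com/v1/records:queryRecord"


def psi_request_url(page_url, api_key, strategy="mobile"):
    """Build a GET URL for PageSpeed Insights (lab data plus field data)."""
    query = urlencode({"url": page_url, "strategy": strategy, "key": api_key})
    return f"{PSI_ENDPOINT}?{query}"


def crux_request(origin, api_key, form_factor="PHONE"):
    """Build the URL and JSON body for a CrUX API POST (field data only)."""
    url = f"{CRUX_ENDPOINT}?{urlencode({'key': api_key})}"
    body = json.dumps({"origin": origin, "formFactor": form_factor})
    return url, body


if __name__ == "__main__":
    print(psi_request_url("https://example.com/", "YOUR_API_KEY"))
    crux_url, crux_body = crux_request("https://example.com", "YOUR_API_KEY")
    print(crux_url)
    print(crux_body)
```

The PageSpeed Insights request targets a single page, while the CrUX request here asks for a whole origin, which is one reason the two sets of numbers will not always line up.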

Summary

It is unlikely that the introduction of Core Web Vitals as a ranking factor will dramatically change rankings.

Improving the technical performance of the site is highly unlikely to bring traffic to your website on its own. It is one of the many page experience signals that Google uses to feature a site in the search engine results pages.

Search engine optimization is nonetheless hard work, as we are all painfully aware. Hopefully, this set of tools helps both companies and consultants get a feel for how their site performs on the different metrics discussed.

So, make sure to access the templates, follow the plug-and-play framework, and start working on improving your site’s user experience.

I expect this dashboard to evolve over time, and I would love to see how you have customized it or what suggestions you have for future iterations.
